I am very excited about the new Solr 5. I had the opportunity to download and install the latest release, and I have to say that I am impressed with the work that has been done to make Solr easy and fun to use right out of the box.

When I first looked at the bin folder, I noticed that the ./bin/solr script from Solr 4.10.x was still there, but when I checked the help for that command, I noticed that there are new parameters. In Solr 4.10, we only had the following parameters: start, stop, restart, and healthcheck. Now in Solr 5.0, we have additional options that make life a little easier: status, create, create_core, create_collection, and delete.

The create_core and create_collection parameters are self-explanatory. What is interesting is that the create parameter is smart enough to detect the mode in which Solr is running; i.e., “Solr Cloud” or “Solr Core” mode. It can then create the proper core or collection.

The status parameter returns a JSON formatted answer that looks like the following. It could be used by a tool like Nagios or JEF Monitor to do some remote monitoring.

Found 1 Solr nodes:

Solr process 6922 running on port 8983

{

"solr_home":"/Applications/solr-5.0.0/server/solr/",

"version":"5.0.0 1659987 - anshumgupta - 2015-02-15 12:26:10",

"startTime":"2015-02-27T17:19:22.455Z",

"uptime":"0 days, 0 hours, 2 minutes, 18 seconds",

"memory":"53.1 MB (%10.8) of 490.7 MB"}
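For monitoring purposes, note that the status output above is plain text followed by a JSON object, so it cannot be fed to a JSON parser as a whole. The following is a minimal sketch, assuming the output keeps the shape shown above; the helper names parse_status and memory_percent are made up for illustration, and only the first node's JSON object is read.

```python
import json

# Sample text copied from the status output shown above.
SAMPLE = """Found 1 Solr nodes:

Solr process 6922 running on port 8983
{
  "solr_home":"/Applications/solr-5.0.0/server/solr/",
  "version":"5.0.0 1659987 - anshumgupta - 2015-02-15 12:26:10",
  "startTime":"2015-02-27T17:19:22.455Z",
  "uptime":"0 days, 0 hours, 2 minutes, 18 seconds",
  "memory":"53.1 MB (%10.8) of 490.7 MB"}
"""


def parse_status(text):
    """Return the first JSON object found in the status text as a dict."""
    start = text.index("{")
    # raw_decode stops at the end of the first complete JSON value,
    # so any text that follows the object is ignored.
    obj, _end = json.JSONDecoder().raw_decode(text[start:])
    return obj


def memory_percent(status):
    """Extract the percentage from a string like '53.1 MB (%10.8) of 490.7 MB'."""
    memory = status["memory"]
    return float(memory[memory.index("(%") + 2 : memory.index(")")])


status = parse_status(SAMPLE)
print(status["version"])
print(memory_percent(status))
```

A monitoring check could compare the value returned by memory_percent against a warning threshold of its choosing.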

Solr Core demo

Since version 4.10, the ./bin/solr start command has had a parameter that lets you test Solr with a few interesting examples: -e <example>. To run Solr Core with sample data in 4.10, you would run the following command: ./bin/solr start -e default. That would give you an example of what could be done with a Solr search engine. In version 5.0, the default example has been replaced by ./bin/solr start -e techproducts. That new example illustrates many of the Solr Core capabilities.

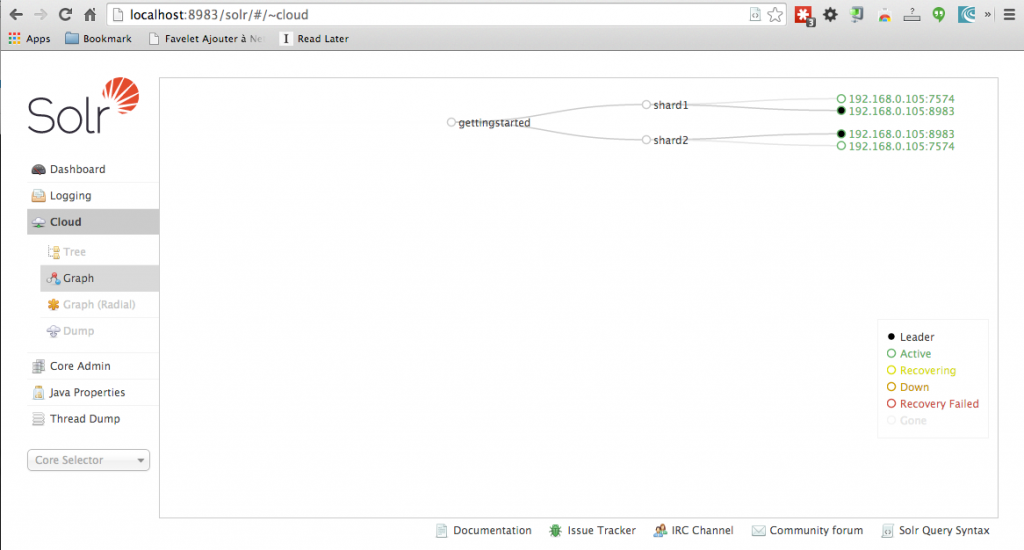

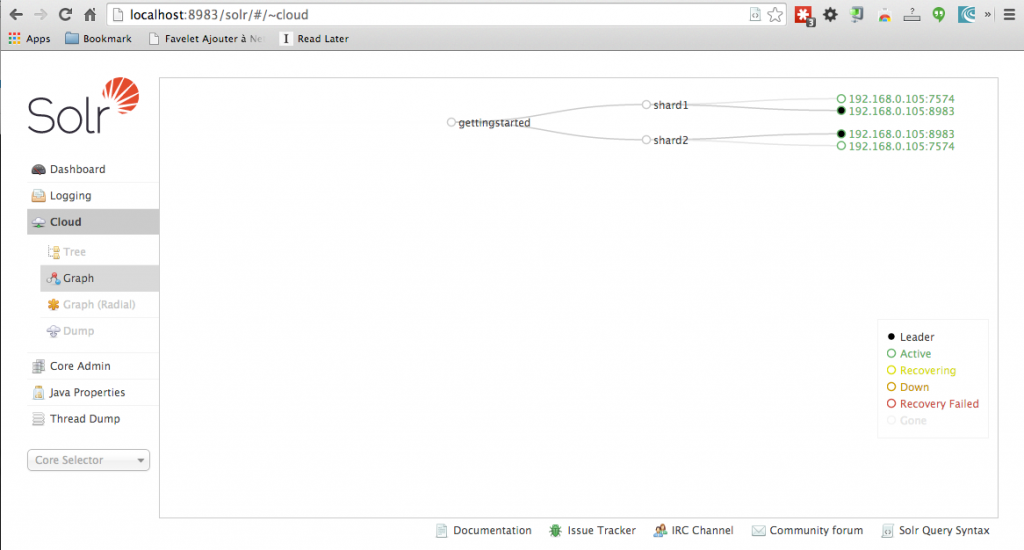

Solr Cloud demo

Configuring a Solr Cloud used to be a very complicated process. Several moving pieces needed to be put together perfectly to obtain a working Solr Cloud server. Solr 5.0 keeps the ./bin/solr start -e cloud option that was already present in 4.10. This option lets you create a Solr Cloud instance by answering a few questions asked by a wizard. You can see an example of the type of questions asked below.

Welcome to the SolrCloud example!

This interactive session will help you launch a SolrCloud cluster on your local workstation.

To begin, how many Solr nodes would you like to run in your local cluster? (specify 1-4 nodes) [2]

Ok, let's start up 2 Solr nodes using for your example SolrCloud cluster.

...

Now let's create a new collection for indexing documents in your 2-node cluster.

Please provide a name for your new collection: [gettingstarted]

gettingstarted

How many shards would you like to split gettingstarted into? [2]

2

How many replicas per shard would you like to create? [2]

2

...

SolrCloud example running, please visit http://localhost:8983/solr

Finally, a script to install Solr as a service

Solr now has a script named install_solr_service.sh that installs Solr as a service on Linux and Unix machines. When I tested Solr 5, I ran the script on a Mac OS X box, so it did not work for me. I received an error message telling me my Linux distribution was not supported and that I needed to set up Solr as a service manually using the documentation provided in the Solr Reference Guide. Even if the install script did not work for me on a Mac, this tool is a great addition for system administrators who like to configure their machines using automated tools like Puppet.

We use Tomcat at work, so where did my WAR go?

As of Solr 5.0, the only supported container is the Jetty one that ships by default with the download file. It is possible to repackage the exploded files into a war, but you will end up with an unsupported installation of Solr. I cannot recommend that route.

Adding documents has never been easier

In Solr 5.0, adding documents has never been easier. We now have access to a new tool named ./bin/post. This tool can take almost any input document imaginable and post it to Solr. It has support for JSON, XML, CSV, and rich documents like Microsoft Office files. The post tool can also act as a crawler to extract information out of a website. During my test, I was not able to get the content of a web page. The only information extracted was metadata like the title, authors, and keywords. Maybe there is a way to obtain the content, but I was not able to find a parameter or a config file that would let me do so. I think that the post utility is a very good tool to get started, but for my day-to-day work, I will stick with our good old open source crawler and Solr Committer that we use here at Norconex.

Here is a quick list of the parameters one can use from the post command:

* JSON file: ./post -c wizbang events.json

* XML files: ./post -c records article*.xml

* CSV file: ./post -c signals LATEST-signals.csv

* Directory of files: ./post -c myfiles ~/Documents

* Web crawl: ./post -c gettingstarted http://lucidworks.com -recursive 1 -delay 1

* Standard input (stdin): echo '{commit: {}}' | ./post -c my_collection -type application/json -out yes -d

* Data as string: ./post -c signals -type text/csv -out yes -d $'id,value\n1,0.47'
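The post tool ultimately sends documents to Solr's update handler over HTTP, so the same thing can be done from code. Here is a rough sketch of an equivalent JSON request in Python, assuming a local Solr on port 8983; the function name, the sample document, and its title field are hypothetical and only serve the illustration.

```python
import json
import urllib.request


def build_update_request(collection, docs, base_url="http://localhost:8983/solr"):
    """Build (but do not send) a POST that adds JSON documents to a collection."""
    # commit=true asks Solr to make the documents searchable right away.
    url = f"{base_url}/{collection}/update?commit=true"
    body = json.dumps(docs).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


# Hypothetical document; the field names are assumptions, not from the article.
req = build_update_request("gettingstarted", [{"id": "1", "title": "Hello Solr"}])
print(req.get_method(), req.full_url)
# To send it to a running Solr instance: urllib.request.urlopen(req)
```

Building the request separately from sending it makes it easy to inspect the URL and body before anything touches the index.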

Solr 5.0 supports even more document types thanks to Tika 1.7

Solr 5 now comes with Tika 1.7. This means that Solr now has support for OCR via the Tesseract application. You will need to install Tesseract separately. With Tika 1.7, Solr also has better support for PST and MATLAB files. Date and spatial unit handling have also been improved in this new release.

More exciting new features

Solr 5.0 now lets you slice and dice your data the way you want it. What this means is stats and facets are now working together. For example, you can automatically get the min, max, and average price for a book. You can find more about this new feature here.
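To give an idea of what combining stats and facets looks like, here is a small sketch that builds the query parameters for statistics computed per pivot facet value. It assumes the techproducts example with its price and cat fields and a local Solr on port 8983; the function name and the piv1 tag are arbitrary choices for this illustration.

```python
from urllib.parse import urlencode, parse_qs


def stats_by_facet_params(stats_field, pivot_field, tag="piv1"):
    """Return query parameters that attach field statistics to a pivot facet."""
    return {
        "q": "*:*",
        "rows": "0",  # only the facet and stats sections are of interest
        "stats": "true",
        # Tag the stats field so the pivot facet can refer to it.
        "stats.field": "{!tag=%s}%s" % (tag, stats_field),
        "facet": "true",
        # Ask for the tagged stats to be computed for each pivot value.
        "facet.pivot": "{!stats=%s}%s" % (tag, pivot_field),
        "wt": "json",
    }


query = urlencode(stats_by_facet_params("price", "cat"))
print("http://localhost:8983/solr/techproducts/select?" + query)
```

Opening the printed URL against a running techproducts example should return, for each category, a stats block that includes values such as min, max, and mean for the price field.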

The folks at Apache also improved the schema API to let us add fields programmatically. A core reload will be done automatically if you use the API. Check out the details on how to use that feature.
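As an illustration of adding a field programmatically, the sketch below builds an add-field command for the schema endpoint. It assumes the collection uses a managed schema and that a text_general field type exists in its configuration; the function name and the field name sell_by are hypothetical.

```python
import json
import urllib.request


def build_add_field_request(collection, name, field_type,
                            base_url="http://localhost:8983/solr"):
    """Build (but do not send) a schema API request that adds one field."""
    payload = {"add-field": {"name": name, "type": field_type, "stored": True}}
    return urllib.request.Request(
        f"{base_url}/{collection}/schema",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


# Hypothetical field name; the type is assumed to exist in the config set.
req = build_add_field_request("gettingstarted", "sell_by", "text_general")
print(req.full_url)
print(req.data.decode("utf-8"))
# To send it to a running Solr instance: urllib.request.urlopen(req)
```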

We can also manage request handlers via the API.

What are the main “gotchas” to look for when upgrading to Solr 5.0?

Solr 5 does not support reading Solr/Lucene 3.x and earlier indexes. You have to make sure that you run the Lucene IndexUpgrader tool that is included with the Solr 4.10 release. Another way to go about it would be to fully optimise your index with a Solr 4.10 installation.

Solr 5 does not support the pre-Solr 4.3 solr.xml format and moves entirely to core discovery. If you need more information about moving to the latest and greatest solr.xml file format, I suggest this article: moving to the new solr.xml.

Solr 5 only supports creating and removing SolrCloud collections through the Collections API. You might still be able to manage collections the former way, but there is no guarantee that it will work in future releases, and the documentation strongly advises against it.
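For reference, a collection creation call through the Collections API is a plain HTTP request. The sketch below builds such a URL for a layout like the one in the Solr Cloud demo (2 shards, 2 replicas each), assuming a local Solr on port 8983; the function name is made up, and maxShardsPerNode is included because a 2-node cluster has to host more than one shard replica per node for that layout.

```python
from urllib.parse import urlencode, urlsplit, parse_qs


def create_collection_url(name, num_shards, replication_factor,
                          max_shards_per_node=1,
                          base_url="http://localhost:8983/solr"):
    """Return a Collections API URL that creates a SolrCloud collection."""
    params = {
        "action": "CREATE",
        "name": name,
        "numShards": num_shards,
        "replicationFactor": replication_factor,
        "maxShardsPerNode": max_shards_per_node,
        "wt": "json",
    }
    return f"{base_url}/admin/collections?" + urlencode(params)


url = create_collection_url("gettingstarted", 2, 2, max_shards_per_node=2)
print(url)
```

Removing a collection follows the same pattern with action=DELETE and the collection name.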

Conclusion

It looks like most of the work done in this release was geared toward ease of use. The inclusion of tools to easily add data to the index with a very versatile script was encouraging. I also liked the idea of moving to a Jetty-only model and approaching Solr as a self-contained piece of software. One significant advantage of going this route is that it will make providing support easier for the Solr team, who will also be able to optimise the code for a specific container.
