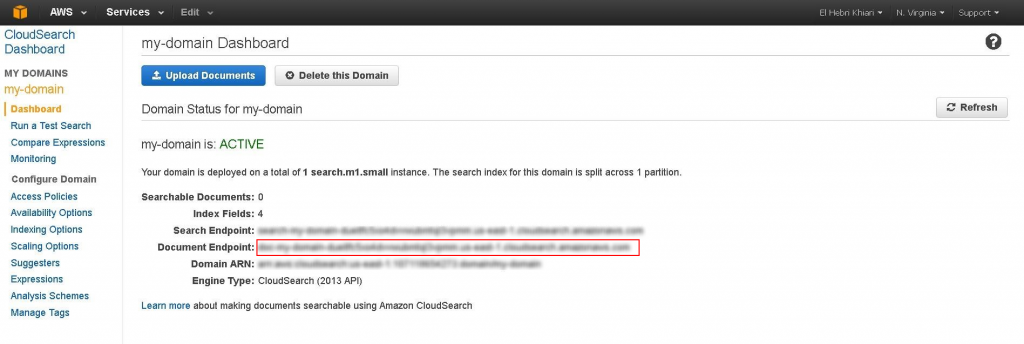

Norconex has released version 2.6.0 of its HTTP Collector web crawler! Among the new features, an upgraded Importer module brings new document parsing and manipulation capabilities. Some of the changes highlighted here also benefit the Norconex Filesystem Collector.

New URL normalization to remove trailing slashes

[ezcol_1half]

The GenericURLNormalizer has a new pre-defined normalization rule: “removeTrailingSlash”. When used, it removes the forward slash (/) found at the end of a URL, so that the URL is treated the same as its equivalent without the trailing slash. As an example:

- https://norconex.com/ will become https://norconex.com

- https://norconex.com/blah/ will become https://norconex.com/blah

It can be used with the 20 other normalization rules offered, and you can still provide your own.

[/ezcol_1half]

[ezcol_1half_end]

<urlNormalizer class="com.norconex.collector.http.url.impl.GenericURLNormalizer">
  <normalizations>
    removeFragment, lowerCaseSchemeHost, upperCaseEscapeSequence,
    decodeUnreservedCharacters, removeDefaultPort,
    encodeNonURICharacters, removeTrailingSlash
  </normalizations>
</urlNormalizer>

[/ezcol_1half_end]
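To make the effect of the rule concrete, here is a minimal Python sketch of trailing-slash removal. It is not the GenericURLNormalizer implementation; the function name `remove_trailing_slash` is hypothetical, and the choice to strip the slash from the path component only (leaving any query string untouched) is an assumption made for illustration.

```python
from urllib.parse import urlsplit, urlunsplit


def remove_trailing_slash(url):
    """Return the URL without a trailing slash on its path (illustrative only)."""
    parts = urlsplit(url)
    path = parts.path
    # Assumption: only the path component is considered; the query string
    # and fragment, if any, are preserved as-is.
    if path.endswith("/"):
        path = path[:-1]
    return urlunsplit(parts._replace(path=path))


for url in ("https://norconex.com/", "https://norconex.com/blah/"):
    print(url, "->", remove_trailing_slash(url))
```

Both URLs from the example above come out without their final slash, so a crawler comparing normalized URLs would treat each pair as the same page.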

Prevent sitemap detection attempts

[ezcol_1half]

By default, StandardSitemapResolverFactory is enabled and tries to detect whether a sitemap file exists at the “/sitemap.xml” or “/sitemap_index.xml” URL path. For websites without sitemap files at these locations, this creates unnecessary HTTP request failures. It is now possible to specify an empty “path” so that this discovery does not take place. In that case, the crawler relies on sitemap URLs explicitly provided as “start URLs” or on sitemaps defined in “robots.txt” files.

[/ezcol_1half]

[ezcol_1half_end]

<sitemapResolverFactory>
  <path/>
</sitemapResolverFactory>

[/ezcol_1half_end]

Count occurrences of matching text

[ezcol_1half]

Thanks to the new CountMatchesTagger, it is now possible to count the number of times any piece of text or regular expression occurs in a document's content or in one of its fields. A sample use case is to use the resulting count as a relevancy factor in search engines. For instance, you can use this new feature to find out how many segments a document URL contains, and give less importance to documents with many segments.

[/ezcol_1half]

[ezcol_1half_end]

<tagger class="com.norconex.importer.handler.tagger.impl.CountMatchesTagger">
  <countMatches
      fromField="document.reference"
      toField="urlSegmentCount"
      regex="true">
    /[^/]+
  </countMatches>
</tagger>

[/ezcol_1half_end]
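The counting idea can be illustrated outside the crawler. The Python sketch below applies the same `/[^/]+` pattern from the configuration to a URL; the `count_matches` helper is hypothetical and only approximates what the tagger does, assuming non-overlapping matches are counted.

```python
import re


def count_matches(text, pattern, regex=True):
    """Count non-overlapping occurrences of a regex or literal string."""
    if regex:
        return len(re.findall(pattern, text))
    # Literal (non-regex) matching falls back to plain substring counting.
    return text.count(pattern)


url = "https://norconex.com/blah"
print(count_matches(url, r"/[^/]+"))  # counts "/norconex.com" and "/blah"
```

Note that with this pattern the host name counts as one segment, since it is preceded by a slash, so the count is the number of path segments plus one.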

Multiple date formats

[ezcol_1half]

DateFormatTagger now accepts multiple source formats when attempting to convert dates from one format to another. This is particularly useful when the date formats found in documents or web pages are not consistent. Some products, such as Apache Solr, usually expect dates to be of a specific format only.

[/ezcol_1half]

[ezcol_1half_end]

<tagger class="com.norconex.importer.handler.tagger.impl.DateFormatTagger"
    fromField="Last-Modified"
    toField="solr_date"
    toFormat="yyyy-MM-dd'T'HH:mm:ss.SSS'Z'">
  <fromFormat>EEE, dd MMM yyyy HH:mm:ss zzz</fromFormat>
  <fromFormat>EPOCH</fromFormat>
</tagger>

[/ezcol_1half_end]
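The general technique of trying several source formats in order can be sketched in Python as follows. This is an illustration, not the DateFormatTagger code: the `to_solr_date` name is hypothetical, the strptime pattern is a rough Python equivalent of the first Java pattern above, and treating EPOCH as milliseconds since 1970-01-01 UTC and parsed dates as UTC are both assumptions.

```python
from datetime import datetime, timezone

# Rough Python equivalents of the source formats in the configuration above.
FROM_FORMATS = ["%a, %d %b %Y %H:%M:%S %Z", "EPOCH"]


def to_solr_date(value, from_formats=FROM_FORMATS):
    """Try each source format in order; return None if none of them applies."""
    for fmt in from_formats:
        try:
            if fmt == "EPOCH":
                # Assumption: EPOCH means milliseconds since 1970-01-01 UTC.
                parsed = datetime.fromtimestamp(int(value) / 1000, tz=timezone.utc)
            else:
                # Assumption: the parsed date is interpreted as UTC.
                parsed = datetime.strptime(value, fmt).replace(tzinfo=timezone.utc)
        except (ValueError, OverflowError, OSError):
            continue  # this format did not match; try the next one
        millis = parsed.microsecond // 1000
        return parsed.strftime("%Y-%m-%dT%H:%M:%S.") + f"{millis:03d}Z"
    return None


print(to_solr_date("Tue, 15 Nov 1994 08:12:31 GMT"))
print(to_solr_date("784887151000"))
```

Both inputs describe the same instant in two different source formats and come out in the single target format. A value that matches none of the formats yields `None` in this sketch.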

DOM enhancements

[ezcol_1half]

DOM-related features just got better. First, the DOMTagger, which allows one to extract values from an XML/HTML document using a DOM-like structure, now supports an optional “fromField” to read the markup content from a field instead of the document content. It also supports a new “defaultValue” attribute to store a value of your choice when your DOM selector has no matches. In addition, both DOMContentFilter and DOMTagger now support many more selector extraction options: ownText, data, id, tagName, val, className, cssSelector, and attr(attributeKey).

[/ezcol_1half]

[ezcol_1half_end]

<tagger class="com.norconex.importer.handler.tagger.impl.DOMTagger">
  <dom selector="div.contact" toField="htmlContacts" extract="html" />
</tagger>

<tagger class="com.norconex.importer.handler.tagger.impl.DOMTagger"
    fromField="htmlContacts">
  <dom selector="div.firstName" toField="firstNames"
      extract="ownText" defaultValue="NO_FIRST_NAME" />
  <dom selector="div.lastName" toField="lastNames"
      extract="ownText" defaultValue="NO_LAST_NAME" />
</tagger>

[/ezcol_1half_end]
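To show what “ownText” extraction with a default value amounts to, here is a small Python sketch using the standard library's ElementTree on well-formed markup. It is only an approximation: the helper names are hypothetical, the attribute predicate matches a `class` attribute with exactly one value rather than a full CSS selector, and the sample markup is invented for illustration.

```python
import xml.etree.ElementTree as ET


def own_text(element):
    """Text directly inside the element, excluding text of child elements."""
    parts = [element.text or ""] + [child.tail or "" for child in element]
    return " ".join("".join(parts).split())  # normalize whitespace


def extract_own_text(markup, css_class, default_value):
    """Own text of each matching <div>, or the default when nothing matches."""
    root = ET.fromstring(markup)
    # Assumption: a simple exact match on the class attribute stands in
    # for a CSS selector such as "div.firstName".
    matches = root.findall(f".//div[@class='{css_class}']")
    values = [own_text(el) for el in matches]
    return values or [default_value]


# Invented sample markup, standing in for the "htmlContacts" field content.
contacts = (
    "<div class='contact'>"
    "<div class='firstName'>Jane <b>(primary)</b></div>"
    "</div>"
)
print(extract_own_text(contacts, "firstName", "NO_FIRST_NAME"))
print(extract_own_text(contacts, "lastName", "NO_LAST_NAME"))
```

The first call returns only the text owned by the matching element, leaving out the nested `<b>` content. The second finds no match and falls back to the default value.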

More control of embedded documents parsing

[ezcol_1half]

GenericDocumentParserFactory now lets you control which embedded documents should not be extracted from their containing document (e.g., do not extract embedded images). It also lets you control which containing documents should not have their embedded documents extracted (e.g., do not extract documents embedded in MS Office documents). Finally, you can now specify, via regular expression, the content types whose embedded documents should be “split” into separate files, as if they were standalone documents (e.g., documents contained in a zip file).

[/ezcol_1half]

[ezcol_1half_end]

<documentParserFactory class="com.norconex.importer.parser.GenericDocumentParserFactory">
  <embedded>
    <splitContentTypes>application/zip</splitContentTypes>
    <noExtractEmbeddedContentTypes>image/.*</noExtractEmbeddedContentTypes>
    <noExtractContainerContentTypes>
      application/(msword|vnd\.ms-.*|vnd\.openxmlformats-officedocument\..*)
    </noExtractContainerContentTypes>
  </embedded>
</documentParserFactory>

[/ezcol_1half_end]
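How the three settings interact can be sketched as a small decision function. The Python below reuses the regular expressions from the configuration above, but the function name, the order of the checks, the use of full-string matching, and the "merge" fallback (embedded content kept with its container) are all assumptions made for illustration, not documented Importer behavior.

```python
import re

# Regular expressions copied from the configuration above.
SPLIT_CONTENT_TYPES = r"application/zip"
NO_EXTRACT_EMBEDDED = r"image/.*"
NO_EXTRACT_CONTAINER = (
    r"application/(msword|vnd\.ms-.*|vnd\.openxmlformats-officedocument\..*)"
)


def embedded_action(container_type, embedded_type):
    """Decide what to do with one embedded document (illustrative only)."""
    if re.fullmatch(NO_EXTRACT_CONTAINER, container_type):
        return "skip"   # nothing is extracted from this kind of container
    if re.fullmatch(NO_EXTRACT_EMBEDDED, embedded_type):
        return "skip"   # this kind of embedded document is never extracted
    if re.fullmatch(SPLIT_CONTENT_TYPES, container_type):
        return "split"  # treated as if it were a standalone document
    return "merge"      # assumed fallback: stays with its container


print(embedded_action("application/zip", "text/plain"))
print(embedded_action("application/zip", "image/png"))
print(embedded_action("application/msword", "text/plain"))
```

With these patterns, a text file inside a zip archive is split out, an image inside the same archive is skipped, and anything embedded in an MS Word document is left unextracted.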

Document parsers now XML configurable

[ezcol_1half]

GenericDocumentParserFactory now makes it possible to override one or more of the parsers the Importer module uses by default, via regular XML configuration. For any content type, you can specify your own custom parser, including an external parser.

[/ezcol_1half]

[ezcol_1half_end]

<documentParserFactory class="com.norconex.importer.parser.GenericDocumentParserFactory">
  <parsers>
    <parser contentType="text/html"
        class="com.example.MyCustomHTMLParser" />
    <parser contentType="application/pdf"
        class="com.norconex.importer.parser.impl.ExternalParser">
      <command>java -jar c:\Apps\pdfbox-app-2.0.2.jar ExtractText ${INPUT} ${OUTPUT}</command>
    </parser>
  </parsers>
</documentParserFactory>

[/ezcol_1half_end]

More languages detected

[ezcol_1half]

LanguageTagger now uses Tika language detection, which supports at least 70 languages.

[/ezcol_1half]

[ezcol_1half_end]

<tagger class="com.norconex.importer.handler.tagger.impl.LanguageTagger">
  <languages>en, fr</languages>
</tagger>

[/ezcol_1half_end]

What else?

Other changes and stability improvements were made in this release. A few examples:

- New “checkcfg” launch action that helps detect configuration issues before an actual launch.

- You can now specify “notFoundStatusCodes” on GenericMetadataFetcher.

- GenericLinkExtractor no longer extracts URLs from HTML/XML comments by default.

- URL referrer data is now always preserved by default.

To get the complete list of changes, refer to the HTTP Collector release notes, or to the release notes of dependent Norconex libraries such as the Importer release notes and the Collector Core release notes.

Useful links

- Download Norconex HTTP Collector

- Get started with Norconex HTTP Collector

- Report your issues and questions on Github

- Norconex HTTP Collector Release Notes

